Stanford’s Alpaca AI: A Low-Budget ChatGPT Alternative

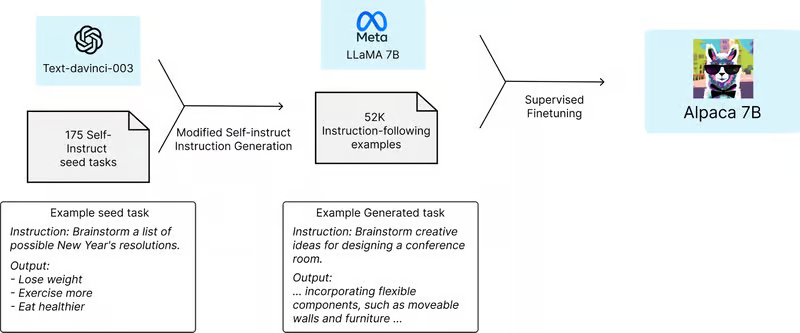

Researchers at Stanford University have developed Alpaca AI, a language model built on Meta's LLaMA 7B model. The team trained Alpaca AI for less than $600 and reported performance comparable to OpenAI's GPT-3.5 (text-davinci-003), the model family behind ChatGPT. The project suggests that sophisticated AI models may soon be within reach of a much wider audience, though not without risks.

AI Language Models Become Increasingly Affordable

The rapid development of large language models such as GPT-4 has transformed AI capabilities, enabling tasks such as writing text or programming code across a wide range of subjects. Big companies including Google, Apple, Meta, Baidu, and Amazon are also developing their own AI models, which are likely to be integrated into many applications and devices in the near future.

Ensuring AI Safety in Commercial Applications

Organizations such as OpenAI are aware of the potential for AI misuse, including spam, malware creation, and targeted harassment. They invest significant time and effort in putting safety measures in place before launching their AI models. However, concerns remain about whether governments can regulate AI development and deployment effectively.

Alpaca AI: A Budget DIY Language Model

The Stanford research team created Alpaca AI by fine-tuning Meta's LLaMA 7B model on 52,000 instruction-following demonstrations generated with OpenAI's text-davinci-003. Generating that data through the OpenAI API cost less than $500, and the fine-tuning compute cost less than $100, for a total of under $600. In the team's blind comparisons, Alpaca performed similarly to text-davinci-003 across a range of tasks. This result shows how quickly, and how cheaply, capable AI models can now be reproduced.

Uncontrolled AI Models: Potential Risks and Limitations

The development of Alpaca AI raises concerns that anyone with machine learning knowledge could build unrestricted, uncontrolled language models, regardless of the terms and conditions set by companies such as OpenAI and Meta. Such unregulated models could pose real risks if misused by malicious actors or authoritarian regimes.

Update: Alpaca AI Taken Down Due to User Abuse and Rising Costs

In light of growing concerns about potential misuse of Alpaca AI and the rising cost of hosting it, the Stanford research team has taken the public Alpaca demo offline as of this writing. The episode underscores the importance of responsible AI development and deployment.

More content at UsAndAI. Join our community and follow us on our Facebook page, Facebook Group, and Twitter.