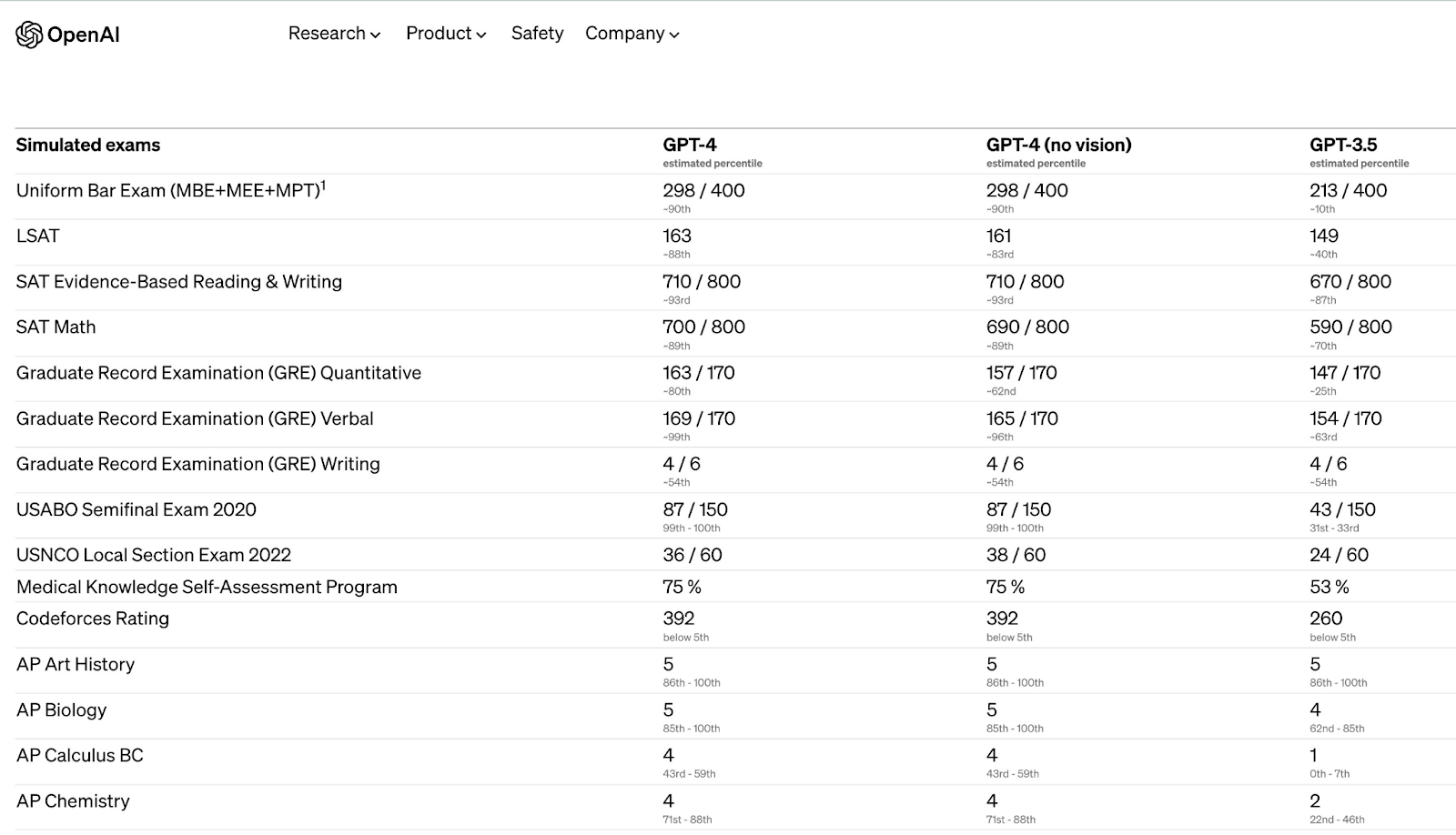

OpenAI recently announced GPT-4, a new language model that accepts text and image inputs and produces text outputs. The model reaches human-level performance on a range of academic and professional benchmarks (see the accompanying image), and OpenAI describes it as more reliable and creative than its predecessor, GPT-3.5. GPT-4 was rumored to be able to create images and video from a text prompt, but that is not the case. The GPT-4 API (as opposed to GPT-4 in ChatGPT Plus) can take an image as input and process it for image recognition and NLP tasks.
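To make the image-in, text-out idea concrete, here is a minimal sketch of what a request pairing a question with an image might look like. It only builds the JSON body in the chat-style message format OpenAI uses for image-capable models and does not send anything. The helper name `build_image_request` is hypothetical, and the model name is an assumption: which models accept images, and whether your account has access to them, may differ.

```python
import base64
import json

# Assumption: an image-capable GPT-4 variant; replace with a model you can access.
ASSUMED_MODEL = "gpt-4-turbo"


def build_image_request(image_bytes: bytes, question: str, model: str) -> dict:
    """Build a chat-style request body that pairs a text question with one image."""
    # Encode the raw image as a base64 data URL so it can travel inside JSON.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:image/png;base64,{encoded}"
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # The text part carries the question about the image.
                    {"type": "text", "text": question},
                    # The image part carries the picture itself.
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real PNG file read from disk.
    payload = build_image_request(
        b"\x89PNG placeholder", "What is shown in this image?", ASSUMED_MODEL
    )
    print(json.dumps(payload, indent=2))
```

The response to such a request is text only, which matches the point above: the model reads images but does not generate them.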

GPT-4 is impressive, with scores around the 90th percentile on some exams, but it still has limits. It still “hallucinates,” producing reasoning errors and inaccurate information. It is therefore crucial to use language model outputs with caution, especially in high-stakes situations. GPT-4 can also produce biased results and be confidently wrong in its predictions.

Despite these limitations, GPT-4 is a major step forward for AI technology.

Source:

- OpenAI Research: GPT-4 (https://openai.com/research/gpt-4/)
